In times of rapid internet connections, we all got used to fast responses, when it comes to web or mobile applications. Some studies show that the time that users need to wait for the website is crucial and has a direct impact on user experience, and thus on the generated income.

Prefetching the data seems to be a good way to reduce the latency. Here, we present a bunch of methods that may be useful to achieve that.

What is the problem?

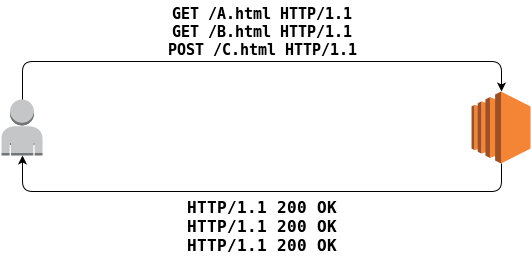

A typical web application is built over the HTTP protocol - every request sent by a user is processed by some kind of a web server, which produces a response and sends it back to the user. Initially, one TCP connection was used per each HTTP connection. It changed when persistent connections were introduced and currently almost all modern browsers use them to avoid establishing and terminating too many short-lasting ones.

HTTP pipelining is another great feature that allows reducing the latency dramatically, but even though it is supported by the majority of HTTP servers, it is rarely enabled in browsers by default, so most of the users do not use it.

These both enhancements share the same idea - the aim is to reduce the time that a person visiting a website spends on waiting for the requested resources. Why don’t go a step further then? If we could prefetch the resources a particular user will want to use in their next steps, before they even send a request to receive them, then the delays will never occur. It is especially meaningful for mobile devices, which do not always have a good internet connection, and having the data already stored could be crucial for user experience. Facebook has put a related issue on its list of top open data problems.

User modeling

Perfectly, we could store a copy of all the data gathered by our system on each device that uses it and update whenever possible, but it is obviously not a good solution. It is pointless to store something that users will never access or that is simply not interesting to them. Preparing such data will also lead to efficiency issues and higher network traffic, so there is a need to find a tradeoff between the amount of prefetched data and the computational power used to generate it. To make such a process efficient we need to decide what is important for a particular person, or a whole group of users.

That’s where the user behavior modeling might be useful. If a system is used daily (e.g. social media or working tools), users tend to perform similar actions. By recognizing these usage patterns we could predict what they would like to do next, and do not even wait for a request to perform this action. There are a couple of methods used to achieve that.

Content-based prediction

From the users’ perspective, an application delivers some kind of documents and allows to perform a limited set of actions. These documents may be directly connected or share a similar topic. Georgakis and Li (see references) describe the algorithm which uses HTML hyperlinks as relations between the documents and their content to identify the subject.

Whenever a user visits a page, all the outbound links are being collected with the surrounding text. This context of a single link is thought to describe the related document in terms of its subject. The content of the document is then cleaned from unnecessary HTML tags, punctuation marks as well as common words which are not meaningful, to extract only relevant information.

The next phase is stemming which helps to extract the informative context by word suffixes removal. Based on a low-to-medium law, which claims the less frequent the term the more informative it is, the least informative terms are eliminated to reduce the dimensionality. Instead of single stems, the bigram model is used, since collocations can describe more complex concepts than single words only. The bigrams are defined for each link separately.

Applying the above procedure helps to describe the documents in terms of their content, by using the context of links pointing to them, and are used further to find the important factors of the bigrams. Each user has its profile, which is used to store the keywords that they find interesting. Documents are considered to be interesting for the particular user if they have already visited a document having similar bigrams.

The prefetching is done after applying weights to each outbound link on a subpage. These weights are calculated based on this particular link keywords and user preferences. The authors claim the reduction of latency from 25% up to 75%, depending on the number of prefetched documents, but with the cost of increasing the bandwidth overhead.

Statistical modeling of interaction

Another way to look at the problem of user behavior modeling is to analyze the HTTP requests. The majority of web servers collect access logs, which are usually well-structured plain text documents and may be processed further to extract the information about the precedence of sent HTTP requests. A time-ordered list of such requests, coming from the same user within a closed time range, may be defined as a session. Assuming that a particular user interacts with the application similarly over time, we should simply notice repeating patterns in the log.

There are many applications of data mining of machine learning techniques to such pattern recognition.

A Markov chain is a random process with a finite set of states and transitions between them. The probability of the next state depends only on the previous state - it is a fulfillment of Markov property. For the n-th order Markov chain, the state depends on n previous states. A Hidden Markov Model is a random process, but with unobservable states and observations, which are state-dependent and visible. Similar to Markov chains, we need to have the probabilities of state-to-state transitions, but additionally some probability distribution of observations while being in a particular state.

Meera Narvekar and Shaikh Sakina Banu (see references) propose an architecture built on top of these two models to predict the next action that the user will perform. They achieved ~30% accuracy on raw data taken from the NASA access log.

N-gram is a popular statistical model, an n items sequence, usually of some linguistic unit. Despite the fact such an architecture is mainly used in computational linguistic, there are some attempts to adapt it to different problems as well. Zhong Su et al. (see references) proposed to use it to model users’ behavior in the case of web application interaction. Once again, they suggest using web server logs, divided into users’ sessions as a learning dataset. They claim that short-lasting sessions have rather random flow and hardly predictable requests, so making any predictions should be performed only on long enough visits.

Due to this assumption, they extended the standard n-gram model into n-gram+, which operates on sequences of any length greater than n. As an input, it takes the whole sequence of user interactions and tries to find the longest subsequence that matches its knowledge database, the statistics of the historical observations, and find the most probable action that will be performed after. If a given path has been never observed before, the first element of this sequence is removed and the algorithm tries to find the next action for one item shorter path. In the case of sequences shorter than n, no prediction is performed. It means that short sessions are not even considered in this process.

The goal of the research, the authors performed, was to find a good tradeoff between the precision and the applicability of the created model. By applicability, they define a fraction of requests which may be predicted by the system. The tests were performed on the same NASA dataset and showed that for traditional n-grams the precision grows with the value of n, but the applicability decreases drastically. Using the n-gram+ model leads to a good compromise between these two figures. Finally, they achieved ~60% precision for the 3-gram+ model, which is slightly lower than for 4-gram alone, but about 10% better than standard 3-gram, with the maintained applicability.

The problem of user behavior modeling for prefetching is just the tip of the iceberg. There are many related problems, like adaptive interfaces, recommendation engines, and content personalization which may directly result in a revenue increase of our business. There is quite a big niche for such systems, but presented architectures seem to be really good starting points for further investigation.

References:

- A. Georgakis, H. Li, “User behavior modeling and content based speculative web page prefetching”, Data & Knowledge Engineering 59 (2006) 770–788

- M. Narvekar, S. S. Banu, “Predicting User’s Web Navigation Behavior Using Hybrid Approach”, Procedia Computer Science 45 ( 2015 ) 3 – 12

- Z. Su, Q. Yang, Y. Lu, H. Zhang, “A Prediction System for Web Requests using N-gram Sequence Models”, Proceedings of the First International Conference on Web Information Systems Engineering (WISE'00)-Volume 1, p.214, June 19-20, 2000